The increasing prevalence of deepfakes raises concerns regarding privacy threats, misinformation, and the pressing need for legal protections.

Deepfake technology uses artificial intelligence to create highly realistic videos, images, or audio recordings. (Instagram)

In recent times, the emergence of deepfake technology has ignited concerns and conversations across various industries.

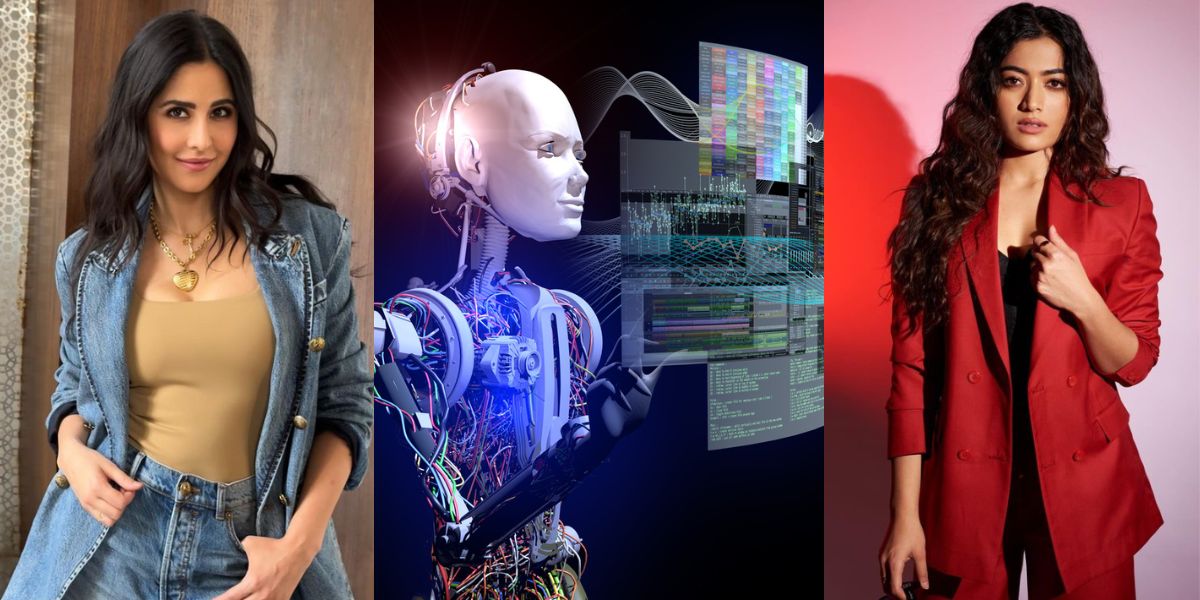

With actresses like Rashmika Mandanna and Katrina Kaif, not to mention politicians like Tamil Nadu IT Minister P Thiaga Rajan, falling victim to manipulated videos and images, the dark side of Artificial Intelligence (AI) has come to the fore, raising questions about privacy, misinformation, and the urgent need for robust legal safeguards.

Just as Rashmika Mandanna’s fans were recovering from the viral deepfake video of the actress, supporters of Katrina Kaif were shocked by manipulated images of her.

Just a few days ago, Kaif shared on her social media handles a still from an action sequence in her upcoming film Tiger 3, in which she wears only a towel.

The AI-generated images instead depict her in a top with a plunging neckline and boxers.

It is unclear whether the same individual is responsible for both deepfakes causing a stir in the film industry, but what is evident is that while AI is a boon, it can also be a bane.

Mandanna’s face was superimposed on British-Indian influencer Zara Patel’s body using AI technology. Rashmika responded on X to the deepfake video, which shows her entering an elevator.

I feel really hurt to share this and have to talk about the deepfake video of me being spread online.

Something like this is honestly, extremely scary not only for me, but also for each one of us who today is vulnerable to so much harm because of how technology is being misused.…

— Rashmika Mandanna (@iamRashmika) November 6, 2023

“It’s a grave offence. Invading someone’s personal space, and body-shaming can be emotionally devastating for the individuals affected. Of course, Rashmika is an actor, and she says she has a support system to see her through this, but not everyone is as fortunate, especially in schools and colleges,” said retired Lt Gen Iqbal Singh Singha, director of Global and Government Affairs at TAC Security, a cybersecurity firm.

Deepfake technology uses AI to create highly realistic videos, images, or audio recordings. It can convincingly imitate your voice and appearance and can be used for both beneficial and harmful purposes, raising concerns about privacy and the spread of misinformation.

Its potential to harm women is particularly troubling. The prevalence of deepfakes has increased significantly in recent years.

Rashmika Deep fake viral.

The increasing menace of technology being put to ill-use needs to be hammered down strongly.

Although we are aware of the existence of such miscreants, we still tend to get carried away by what we see.

From WYSIWYG (What You See Is What You Get) to…

— Iyan Karthikeyan (@Iyankarthikeyan) November 7, 2023

During the Russia-Ukraine conflict, an alarming deepfake video showed Ukrainian President Volodymyr Zelenskyy allegedly ordering his soldiers to surrender, highlighting the technology's significance in the information war. Deepfake videos of Indian politicians have also circulated in the past.

In April, Palanivel Thiaga Rajan, then finance minister of Tamil Nadu, took to Twitter (now X) to allege that a “blackmail gang” was making a “desperate attempt” to disparage him and the ruling DMK in the state by releasing fabricated audio clips.

His video message came a day after Tamil Nadu BJP chief K Annamalai released an audio clip claiming to contain the voice of PTR purportedly talking about the influence wielded by Udhayanidhi Stalin and Sabareesan — the son and son-in-law, respectively, of Chief Minister MK Stalin.

According to Singha, such incidents also point to a diminishing sense of personal space. “In the case of actors, it could be a fan turning hostile, undermining them. It may give a sense of power, but it’s unfortunate,” Singha added.

Rushikesh Prakash Borse, assistant professor at the Great Lakes Institute of Management, Chennai, with 12 years of experience in AI and Machine Learning (ML), believes technology can’t be blamed for everything.

“However, this is an important issue because people in India lead very social lives. In places like the UK, the US, and Europe, if someone wants to take a photograph of a beggar, they need to seek permission. This deepfake is based on generative networks, which is a recent advancement in deep learning models. It plays a crucial role in research, especially when creating your own data becomes challenging,” he says.

PM @narendramodi ji’s Govt is committed to ensuring Safety and Trust of all DigitalNagriks using Internet

Under the IT rules notified in April, 2023 – it is a legal obligation for platforms to

➡️ensure no misinformation is posted by any user AND

➡️ensure that when reported by… https://t.co/IlLlKEOjtd

— Rajeev Chandrasekhar 🇮🇳 (@Rajeev_GoI) November 6, 2023

“This technology allows you to create your own data for research and prove a concept or a logic. It’s not a new concept, as people have previously taken vulgar photos and posted them online. It’s just that our copyright rules are weak. We shouldn’t blame technology for this. Technological advancement will always be there. Just like there are medications required for illness, which can make a healthy person drowsy if taken, technology has its dual nature,” added Borse.

Maneesh Chaturvedi, a software engineer working for an MNC in Bengaluru, agrees that technology is a double-edged sword. “We need to keep pace with it. As technology evolves, so should our legal system. Our laws must change to make these actions punishable,” emphasised Chaturvedi.

While we have provisions to deal with cybercrime in our Information Technology Act of 2000 and the Copyright Act of 1957, many grey areas make it challenging to address deepfakes specifically.

However, Chaturvedi believes there are several ways to mitigate their threats.

“Multi-factor authentication like OTPs, liveness tests for biometrics, and creating digital identities based on blockchain are some of the ways to curb them. Digital identity is akin to taking ownership of your virtual presence,” said the Bengaluru-based techie.

Another way to tackle deepfakes is by conducting a reverse image search and identifying the source of the content.

According to Dr Syed Mohammad Meesum, assistant professor of Computer Science and Discipline Coordinator for Computer Science at Krea University, “As of today, there are always cues and giveaways that reveal a video is fake. It’s not very difficult to spot. Careful examination will reveal mismatched lip sync and hand movements.”

Deepfakes convincingly alter audiovisual content, making it difficult for people to distinguish truth from fiction. Superimposed images and manipulated voices not only pose privacy risks but can also be used for blackmail or defamation.

False narratives and misinformation can weaken relationships and institutions. The consensus is that there is an urgent need for strong laws, trained personnel, and robust detection mechanisms to protect privacy and information integrity.
